How exactly do search engines work?

Table of Contents:

- How exactly do search engines work?

- How do search engines use bots (Googlebot) to discover pages and blog posts?

- How do search engines determine page rankings?

- How do search engines identify the best possible answer to appear at the top of Google’s search results?

- How can an SEO agency like ours improve a business’s ranking?

Introduction

Search engines are powered by highly sophisticated mathematical algorithms and artificial intelligence. When you enter a search query, you’re presented with pages offering highly relevant answers. But you already knew that, right?

Businesses across the South West of England, especially in areas like Bath and Bristol, will be interested in how search engines rank pages, how their company can reach the first page of Google, attract more customers, and ultimately generate more sales. We are experts in helping businesses across the South West achieve this.

How exactly do search engines work?

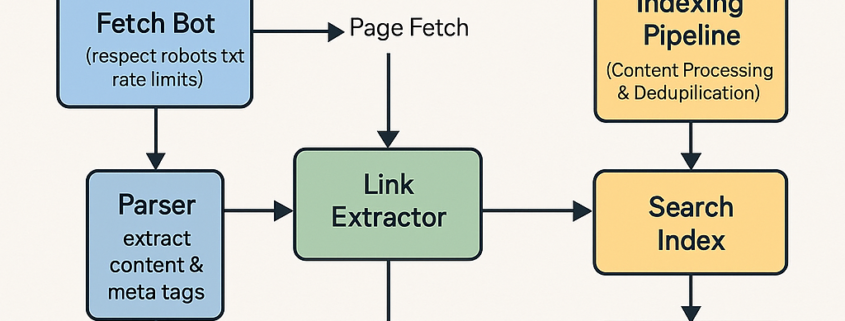

Crawling:

This involves discovering new pages and blog posts on the internet.

Without crawling, search engine indexes would become outdated as no new information would be included.

Crawling is therefore essential, and businesses must ensure that pages can be crawled.

For example, if a robots.txt rule accidentally blocks a page, search engines can’t crawl it. A “noindex” tag is a separate issue: the page can still be crawled, but it is kept out of the index.

Indexing:

When search engines find well-written, useful content, they can add it to their index.

Businesses must remember that not all pages get indexed. If the content is poorly written or of low quality, it may not be indexed.

For example, if you sell products like e-bikes, you’re competing against thousands of other businesses, making high-quality content essential for indexing.

Ranking:

Ranking is complex: pages are evaluated against a large number of signals, often cited as more than 200 SEO ranking factors.

For instance, a comprehensive, well-written article without quality backlinks may never reach the first page of search results.

If you’re competing against global brands, bear in mind that they produce high-quality content written by experts and typically have extensive backlink profiles, sometimes over 30,000 links.

Such businesses have a higher chance of ranking well.

Thus, ranking requires a combination of high-quality content marketing, technical SEO, and strong backlinks.

How do search engines discover new pages and blog posts?

Search engines use bots, also called spiders or crawlers (Google’s is called Googlebot), which follow links from pages they already know about to discover new websites and content. Once a page is found, its internal links lead the bot to additional content on the same site.

How much of your site gets crawled depends on its crawl budget.

Popular, frequently updated websites are crawled more often and more deeply, while less prominent websites are crawled less.
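To make link-following concrete, here is a minimal sketch of a breadth-first crawl. It is an illustration only, not how Googlebot is implemented: the `PAGES` dictionary is a hypothetical in-memory site that stands in for real HTTP requests, and `max_pages` is a simplified stand-in for a crawl budget.

```python
from collections import deque
from html.parser import HTMLParser


class LinkExtractor(HTMLParser):
    """Collects the href value of every <a> tag in a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


# Hypothetical site: URL path -> HTML body (stands in for real HTTP fetches).
PAGES = {
    "/": '<a href="/services">Services</a> <a href="/blog">Blog</a>',
    "/services": '<a href="/">Home</a>',
    "/blog": '<a href="/blog/post-1">Post 1</a>',
    "/blog/post-1": '<a href="/blog">Back</a>',
    "/orphan": "No page links here, so a link-following bot never finds it.",
}


def crawl(start, max_pages):
    """Breadth-first crawl from `start`, visiting at most `max_pages` pages."""
    seen = {start}
    queue = deque([start])
    visited = []
    while queue and len(visited) < max_pages:
        url = queue.popleft()
        visited.append(url)
        parser = LinkExtractor()
        parser.feed(PAGES.get(url, ""))
        for link in parser.links:
            if link not in seen:  # avoid queuing the same page twice
                seen.add(link)
                queue.append(link)
    return visited


print(crawl("/", max_pages=10))
```

In this toy site, `/orphan` is never visited because no other page links to it, which is why internal linking matters for discovery. Lowering `max_pages` shows how a limited budget leaves deeper pages uncrawled.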

What prevents search engines from crawling and indexing a page?

Sometimes a “noindex” tag is accidentally left on a page, which prevents it from being indexed. A robots.txt rule that blocks the page stops it from being crawled in the first place.
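One way to catch a stray “noindex” before it causes problems is to scan a page’s HTML for a robots meta tag. The sketch below uses only Python’s standard library; the function name `has_noindex` and the sample HTML are hypothetical, and it checks the meta tag only, not the `X-Robots-Tag` HTTP header, which can carry the same directive.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots"> / <meta name="googlebot">."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = dict(attrs)
        name = (attributes.get("name") or "").lower()
        if name in ("robots", "googlebot"):
            content = attributes.get("content") or ""
            for part in content.split(","):
                if part.strip():
                    self.directives.add(part.strip().lower())


def has_noindex(html):
    """Return True if the HTML asks search engines not to index the page."""
    parser = RobotsMetaParser()
    parser.feed(html)
    # "none" is shorthand for "noindex, nofollow".
    return "noindex" in parser.directives or "none" in parser.directives


page = '<html><head><meta name="robots" content="noindex, follow"></head></html>'
print(has_noindex(page))
```

Running a check like this across a site before launch is a cheap way to confirm that only the intended pages carry the directive.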

Crawl Budget:

The crawl budget refers to the amount of time and resources a search engine spends crawling your business’s blog posts and pages.

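Businesses often preserve crawl budget by using robots.txt to block pages they don’t want crawled, like CMS login pages. A “noindex” tag keeps a page out of the index, but the page still has to be crawled for the tag to be seen, so the tag alone does not save crawl budget.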

This ensures that crawling resources focus on valuable pages, such as newly written content marketing.
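A common way to steer crawlers away from low-value URLs such as login pages is a robots.txt rule. As a rough sketch, Python’s standard `urllib.robotparser` can show which paths a well-behaved bot would be allowed to fetch. The robots.txt content, the paths, and the `ExampleBot` user agent below are all hypothetical.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt that blocks admin and login URLs for all bots.
ROBOTS_TXT = """\
User-agent: *
Disallow: /wp-admin/
Disallow: /login
"""


def allowed_paths(robots_txt, paths, user_agent="ExampleBot"):
    """Return {path: True/False} showing whether the bot may crawl each path."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {path: parser.can_fetch(user_agent, path) for path in paths}


result = allowed_paths(ROBOTS_TXT, ["/blog/new-post", "/wp-admin/index.php", "/login"])
for path, allowed in result.items():
    print(path, "crawlable" if allowed else "blocked")
```

Blocked paths are never requested by compliant crawlers, so the time they would have taken can go to pages that matter.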

The two main factors influencing crawl budget are:

Crawl demand: How popular is your website? Popular sites, such as the BBC, have high crawl demand.

Crawl capacity: How quickly your website responds to crawler requests.

Sitemaps:

Every Bristol business aiming to improve its SEO should have an up-to-date sitemap, essentially a “shopping list” of the pages and blog posts the business wants indexed.
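For illustration, a basic XML sitemap in the sitemaps.org format can be generated with Python’s standard library. This is a minimal sketch: the `build_sitemap` helper, the example.com URLs, and the dates are hypothetical, and most CMS platforms or SEO plugins will generate a sitemap for you.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(entries):
    """Build sitemap XML from (url, last_modified_date) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in entries:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod  # W3C date, e.g. 2024-01-15
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Hypothetical pages the business wants indexed.
sitemap = build_sitemap([
    ("https://www.example.com/", "2024-01-15"),
    ("https://www.example.com/blog/seo-basics", "2024-02-01"),
])
print(sitemap)
```

The resulting file is typically saved as `sitemap.xml` at the site root and submitted through Google Search Console.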

How do search engines determine page rankings?

After crawling and indexing, search engines use SEO ranking factors to determine page positions.

Pages with quality backlinks and strong on-page SEO typically rank highly.

Conversely, pages lacking backlinks or having thin content rarely rank well.

Are there any other factors that can prevent a page from getting indexed?

Search engines don’t crawl and index every page.

Pages deemed spam or containing thin content are unlikely to be indexed.

Pages that are indexed and rank well tend to have high-quality content and demonstrate strong E-E-A-T (Experience, Expertise, Authoritativeness, Trustworthiness). E-E-A-T is a set of quality criteria rather than a numeric score. Businesses publishing spam or low-quality content risk not being indexed.

HTTP Status Code Errors:

Pages that return HTTP error status codes (4xx or 5xx) are not indexed.

For example, a broken link that leads to a 404 error gives the crawler nothing to index.
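As a simplified sketch, the status codes returned by a site’s URLs can be grouped by class to flag the ones that block indexing. The `responses` data and the outcome labels are hypothetical; a real audit would collect the codes from a site crawl or server logs.

```python
from http import HTTPStatus


def crawl_outcome(status_code):
    """Map an HTTP status code to a rough, simplified crawl outcome."""
    if 200 <= status_code < 300:
        return "ok"
    if 300 <= status_code < 400:
        return "redirect"
    if 400 <= status_code < 500:
        return "client error"
    if 500 <= status_code < 600:
        return "server error"
    return "unknown"


def report(responses):
    """Return (url, code, phrase, outcome) rows for URLs that returned 4xx/5xx."""
    rows = []
    for url, code in responses.items():
        if code >= 400:
            # HTTPStatus(code) assumes a standard code; unknown codes raise ValueError.
            rows.append((url, code, HTTPStatus(code).phrase, crawl_outcome(code)))
    return rows


# Hypothetical results of requesting three URLs.
responses = {"/": 200, "/old-page": 404, "/api": 503}
for row in report(responses):
    print(row)
```

URLs that appear in this report are the ones to fix or redirect first.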

The “noindex” tag:

A “noindex” tag left on a page prevents it from being indexed, even though the page can still be crawled.

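Submitting your site for indexing via Google Search Console:

You can manually request indexing for individual URLs with the URL Inspection tool, which can speed up crawling and indexing compared to waiting for natural discovery, although it doesn’t guarantee that a page will be indexed.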

How Our Agency Can Help:

We have partnered with South West businesses for years, employing knowledgeable SEO consultants in Bristol with over 10 years of experience.

We know how to get companies on the first page.

Prices for local and organic SEO services start at just £1,000 per month, making them affordable for SMEs.

SEO is a slow process requiring long-term investment.

Even medium-competition industries can sometimes take over six months to rank on the first page of the search results.

However, we have a 100% success rate: every business we’ve worked with has achieved first-page rankings. For a no-obligation quote, call us today.